Usability testing methods

Usability testing isn't one-size-fits-all, and understanding which method serves your specific research goals helps you gather insights that actually drive better product decisions.


Choosing the right usability testing method can make the difference between gathering actionable insights and wasting valuable time and resources. With dozens of different approaches available, from quick five second tests to comprehensive moderated sessions, the key is understanding which method serves your specific research goals.

Research shows that 88% of users are less likely to return to a site after a bad user experience, making usability testing one of the most critical investments you can make in your product's success. Yet many teams struggle with method selection, often defaulting to familiar approaches rather than choosing the most effective one for their situation.

This guide provides a comprehensive overview of usability testing methods, helping you understand when and how to use each approach to gather the insights that matter most for your product decisions.


Overview of usability testing methods

Usability testing isn't a single approach – different methods serve different goals, budgets, and product stages. Think of usability testing methods as tools in a researcher's toolkit, each designed for specific situations and research questions.

At its core, usability testing evaluates how easily users can accomplish tasks with your product. However, the way you conduct this evaluation can vary dramatically based on what you're trying to learn, who your users are, and what resources you have available.

Some methods focus on gathering quantitative data about task completion rates and time-on-task, while others prioritize qualitative insights about user frustrations and motivations. Some require significant time and budget investments, while others can be completed in minutes with minimal resources.

The most successful research programs combine multiple methods strategically, using each approach when it provides the most value. Rather than relying on a single testing method, effective researchers build a testing cadence that evolves with their product and team needs.


Why there are different usability testing methods

Not all tests answer the same questions, and understanding these differences is crucial for selecting the right approach for your research goals.

Some usability testing methods focus on first impressions and initial user reactions. These tests help you understand whether users immediately grasp what your product does and whether it creates a positive initial experience. Methods like five second testing excel at capturing these immediate reactions.

Other methods prioritize task success and workflow efficiency. These approaches evaluate whether users can complete specific actions successfully and efficiently. Moderated task-based testing and unmoderated usability testing are particularly effective for understanding task completion patterns.

Still other methods examine navigation clarity and information architecture. These tests help you understand whether users can find what they're looking for and whether your site's organization makes sense to them. Card sorting, tree testing, and first-click testing are specifically designed to evaluate these structural elements.

Finally, some methods focus on preference and comparison between different design options. These approaches help you make decisions between competing designs or understand which elements resonate most with users.

The method you choose should align with the specific questions you're trying to answer. Using a method to answer a question it wasn't designed for often leads to inconclusive or misleading results.

High-level categories of usability testing methods

Understanding the fundamental categories of usability testing methods helps you navigate the various options and select the most appropriate approach for your research needs.

Moderated vs unmoderated testing

Moderated testing involves a facilitator who guides participants through the testing session in real-time. The moderator can ask follow-up questions, clarify instructions, and probe deeper into user behaviors and motivations.

Benefits of moderated testing:

Rich qualitative insights from real-time questioning

Ability to adapt questions based on participant responses

Deeper understanding of user motivations and thought processes

Opportunity to explore unexpected behaviors or reactions

Challenges of moderated testing:

More time-intensive to conduct and analyze

Requires skilled facilitators to avoid bias

Limited by scheduling constraints and facilitator availability

Potential for facilitator influence on participant behavior

Unmoderated testing allows participants to complete tasks independently without real-time guidance. Participants follow predetermined instructions and provide feedback through surveys, recordings, or other structured methods.

Benefits of unmoderated testing:

Faster data collection and turnaround times

More natural user behavior without facilitator influence

Easier to scale across larger participant groups

Cost-effective for gathering quantitative data

Challenges of unmoderated testing:

Limited ability to ask follow-up questions

Potential for misunderstood instructions or technical issues

Less rich qualitative insights

Difficulty exploring unexpected findings in real-time

Learn more in our video on unmoderated usability testing.

Remote vs in-person testing

Remote testing is conducted with participants in their natural environment, typically using screen-sharing software or specialized testing platforms. This approach has become increasingly popular, especially following the shift to distributed work environments.

Benefits of remote testing:

Access to geographically diverse participants

More natural user environment and behavior

Cost-effective without travel or facility requirements

Easier scheduling and logistics management

Challenges of remote testing:

Limited ability to observe body language and physical reactions

Potential technical issues or connectivity problems

Less control over testing environment and distractions

Difficulty with certain types of testing (like mobile app testing)

In-person testing brings participants to a controlled environment where researchers can observe behavior more closely and control environmental factors.

Benefits of in-person testing:

Rich observation of body language and physical reactions

Better control over testing environment and conditions

Easier to handle complex testing scenarios or technical setups

More personal connection between researcher and participant

Challenges of in-person testing:

Higher costs for facilities and participant travel

Limited geographic reach for participant recruitment

More complex scheduling and logistics

Potential for artificial behavior in lab settings

Qualitative vs quantitative testing

Qualitative testing focuses on understanding the "why" behind user behaviors. This approach generates insights about user motivations, frustrations, and thought processes.

Qualitative methods typically provide:

User comments and verbal feedback

Observations about user behavior patterns

Insights into user motivations and decision-making processes

Understanding of emotional responses to design elements

Quantitative testing emphasizes measurable metrics and statistical data about user performance and behavior patterns.

Quantitative methods typically measure:

Task completion rates and success metrics

Time-on-task and efficiency measurements

Error rates and failure points

Click patterns and navigation paths
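To make the quantitative measures above more concrete, here is a minimal Python sketch that summarizes a set of task attempts into a completion rate, a time-on-task figure, and an error rate. The `TaskAttempt` record, its fields, and the sample data are hypothetical. Testing tools export results in their own formats, and teams define things like "error" differently, so treat this as an illustration rather than a standard calculation.

```python
import math
from dataclasses import dataclass
from statistics import median


@dataclass
class TaskAttempt:
    """One participant's attempt at a single task (hypothetical record format)."""
    participant_id: str
    completed: bool   # did the participant finish the task successfully?
    seconds: float    # time-on-task, from task start to completion or giving up
    errors: int       # wrong clicks, dead ends, or other recorded mistakes


def summarize(attempts: list[TaskAttempt]) -> dict[str, float]:
    """Compute basic quantitative usability metrics for one task."""
    if not attempts:
        raise ValueError("need at least one attempt")
    successes = [a for a in attempts if a.completed]
    return {
        # Share of attempts that ended in success.
        "completion_rate": len(successes) / len(attempts),
        # Median time of successful attempts; less sensitive than the mean
        # to one unusually slow participant. NaN if nobody succeeded.
        "median_time_successful": (
            median(a.seconds for a in successes) if successes else math.nan
        ),
        # Average number of recorded errors per attempt.
        "errors_per_attempt": sum(a.errors for a in attempts) / len(attempts),
    }


if __name__ == "__main__":
    attempts = [
        TaskAttempt("p1", True, 42.0, 0),
        TaskAttempt("p2", True, 65.5, 2),
        TaskAttempt("p3", False, 120.0, 4),
        TaskAttempt("p4", True, 38.0, 1),
        TaskAttempt("p5", False, 95.0, 3),
    ]
    print(summarize(attempts))
```

Reporting time-on-task for successful attempts only is one common convention; some teams also report it for all attempts. With the small participant counts typical of usability studies, these numbers are best read as directional.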

The most effective usability testing programs combine both qualitative and quantitative approaches, using quantitative data to identify what's happening and qualitative insights to understand why it's happening.

Popular usability testing methods overview

Each usability testing method serves specific research goals and provides different types of feedback. Understanding the strengths and applications of each method helps you build a comprehensive testing strategy that addresses your product's unique needs.

The methods below represent the most commonly used approaches in UX research, each with distinct advantages for different stages of product development and types of research questions.


Usability testing methods at a glance

Method | Best for | Moderation | Data type | Ideal product stage | Participant count |

|---|---|---|---|---|---|

Five second testing | First impressions, visual clarity, messaging effectiveness | Unmoderated | Qualitative | Concept, Design, Pre-launch | 15-30 |

First-click testing | Navigation intuition, button placement, task initiation | Unmoderated | Quantitative + Qualitative | Design, Pre-launch | 30-50 |

Card sorting | Information architecture, content organization, mental models | Can be either | Quantitative + Qualitative | Concept, Design | 15-30 |

Tree testing | Navigation structure, findability, site hierarchy | Unmoderated | Quantitative | Design, Pre-launch | 30-50 |

Preference testing | Design decisions, visual comparisons, feature prioritization | Unmoderated | Qualitative + Quantitative | Design | 30-100 |

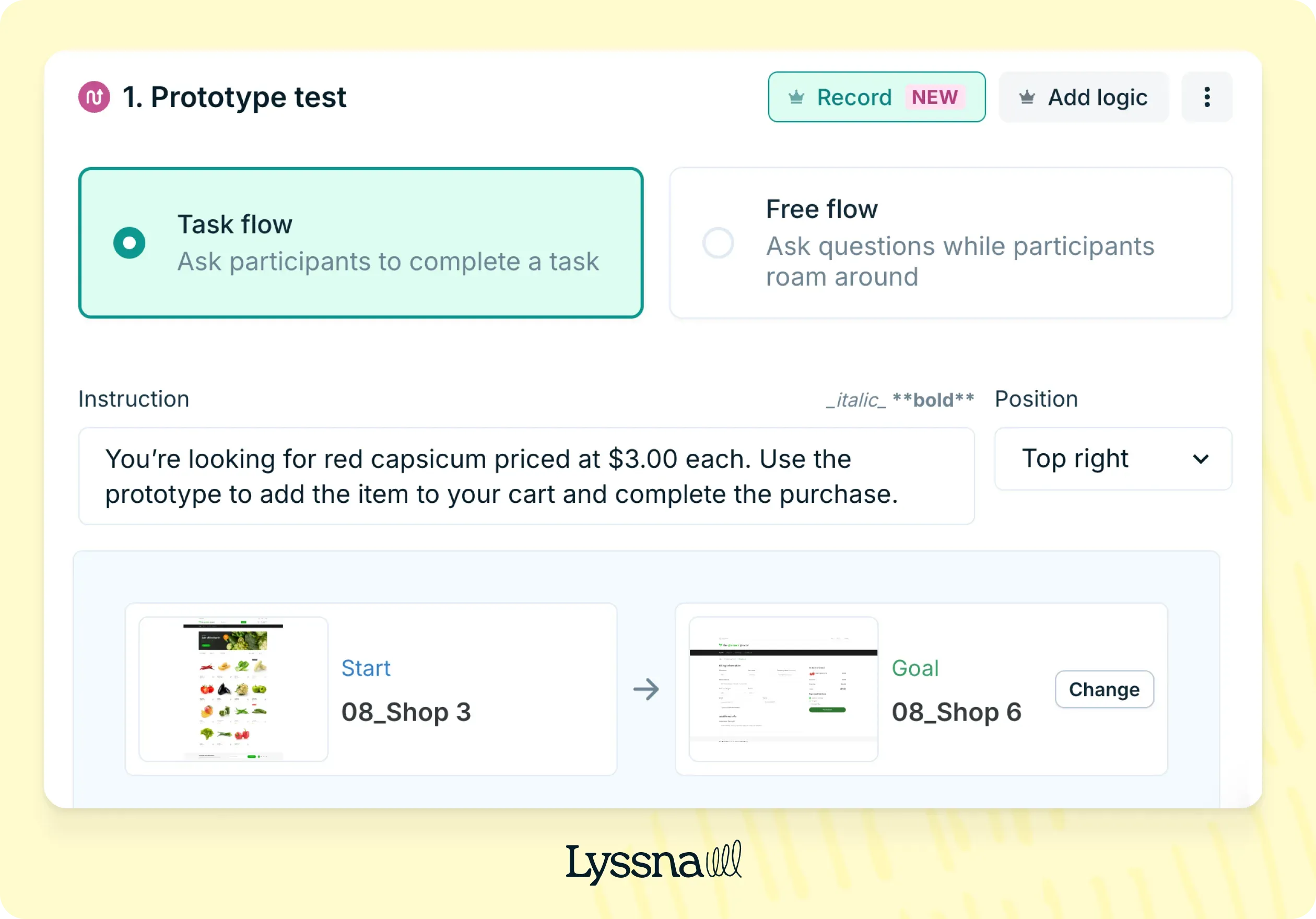

Prototype testing | User flows, interaction patterns, feature validation | Can be either | Qualitative + Quantitative | Design, Pre-launch | 5-10 |

Moderated usability testing | Deep insights, complex workflows, understanding motivations | Moderated | Qualitative | Design, Pre-launch, Post-launch | 5-10 |

Unmoderated usability testing | Task success rates, scalable feedback, natural behavior | Unmoderated | Qualitative + Quantitative | Pre-launch, Post-launch | 30-100 |

Guerrilla testing | Quick validation, early concepts, informal feedback | Moderated | Qualitative | Concept, Design | 10-20 |

First impression tests

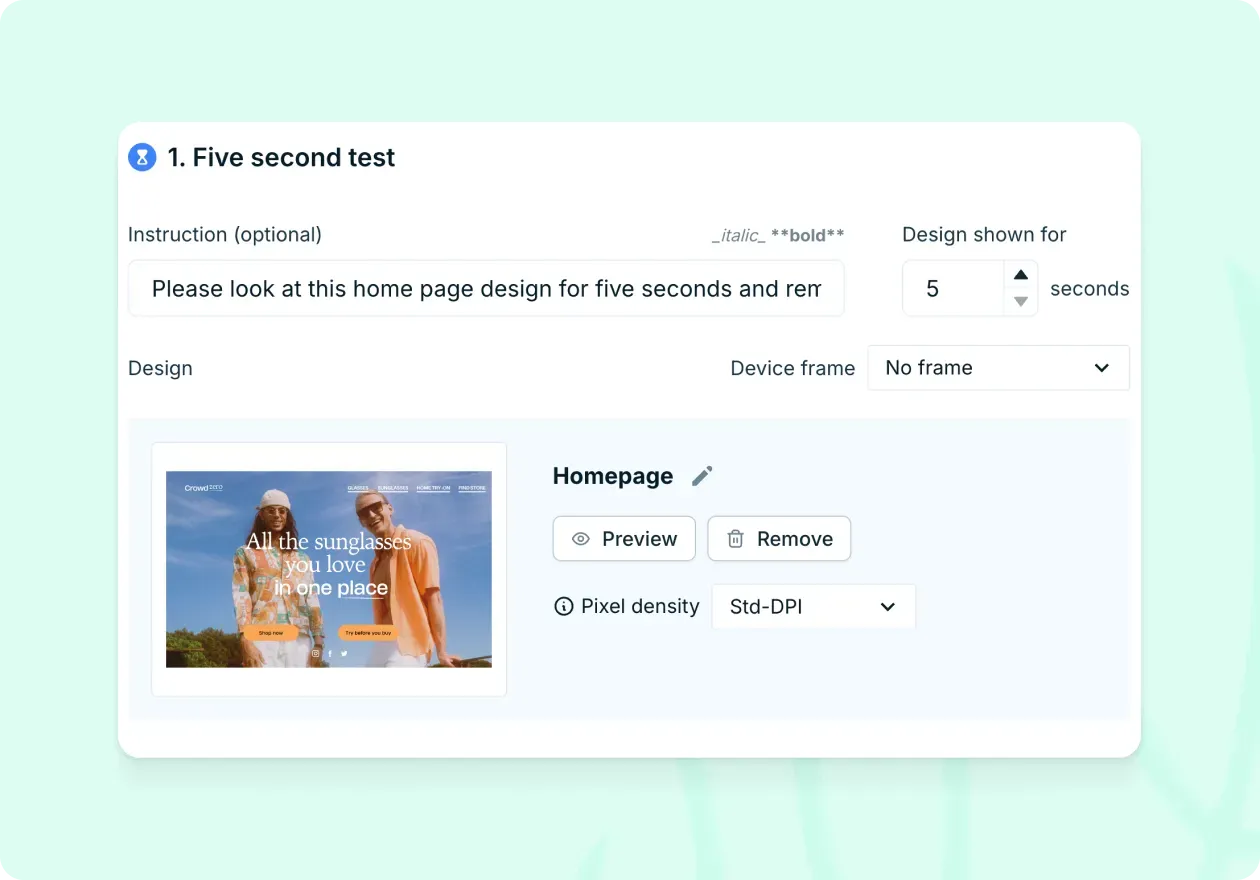

Five second testing captures users' immediate reactions and first impressions of your design. For example, you could create a five second test in Lyssna that shows participants a screenshot of one of your product pages. After they've viewed the page for five seconds, ask them a series of questions to evaluate their initial impressions, such as: What do you remember about the page? What do you think this page is selling? Would you be interested in buying this product based on what you just saw?

This method is particularly valuable for testing landing pages, home pages, and marketing materials where first impressions significantly impact user decisions. Five second testing helps you understand whether your design communicates its purpose clearly and creates the intended emotional response.


When to use five second testing:

Early design stages when testing initial concepts

Before launching new landing pages or marketing campaigns

When you need quick feedback on visual design elements

To validate whether your design communicates its intended message

For detailed guidance on implementing this method, see our comprehensive five second testing guide.

Navigation and information architecture tests

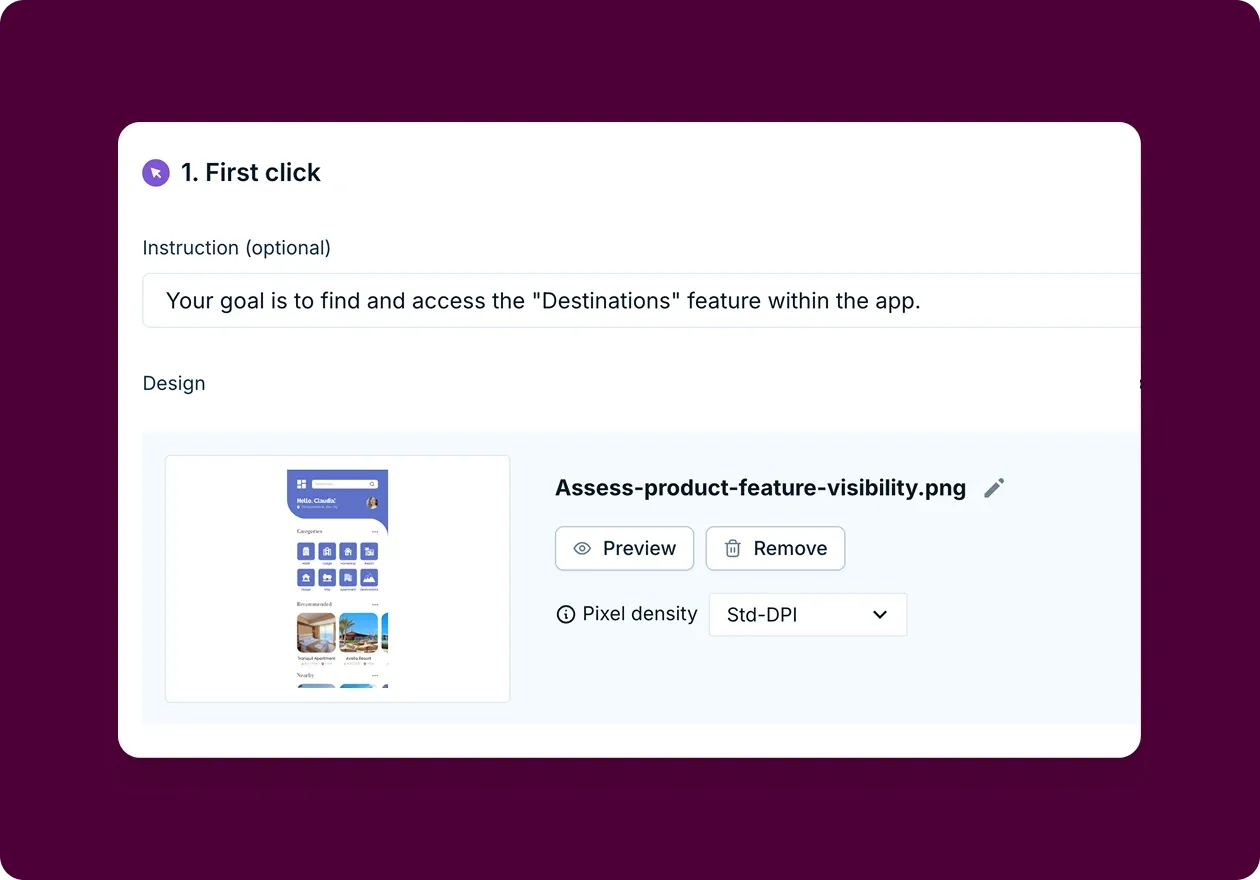

First-click testing evaluates whether users can identify the correct starting point for completing tasks on your website. Say your SaaS company is adding a feature to its project management tool that lets users create a new project at the click of a button. You could run a first-click test to see whether the 'Create new project' button is clear to users by giving them a specific task, such as 'Where would you go to create a new project?'

This method is essential for understanding navigation patterns and identifying potential usability issues before they impact the full user journey.


Card sorting helps you understand how users naturally categorize and organize information. Participants group related items together and often provide labels for their categories, revealing their mental models of your content structure.
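One common way to quantify card sorting results is a co-occurrence (similarity) matrix: for each pair of cards, the share of participants who placed them in the same group. The Python sketch below assumes a hypothetical data format (one dict per participant, mapping that participant's group labels to the cards in each group) and uses made-up sample data. It illustrates the general idea and is not how any particular tool computes its matrix.

```python
from collections import Counter
from itertools import combinations

# Hypothetical results: one dict per participant, mapping the participant's
# group label to the cards they placed in that group.
SORTS = [
    {"Billing": ["Invoices", "Payment methods"], "Account": ["Profile", "Password"]},
    {"Money": ["Invoices", "Payment methods", "Profile"], "Security": ["Password"]},
    {"Settings": ["Profile", "Password", "Payment methods"], "Documents": ["Invoices"]},
]


def co_occurrence(sorts: list[dict[str, list[str]]]) -> dict[tuple[str, str], float]:
    """For each pair of cards, return the fraction of participants who grouped them together.

    Group labels are ignored; only which cards share a group matters.
    Pairs that no participant grouped together are absent (similarity 0).
    """
    pair_counts = Counter()
    for sort in sorts:
        for cards in sort.values():
            # Sorting the cards gives each pair one canonical key, e.g. ("Invoices", "Profile").
            for pair in combinations(sorted(cards), 2):
                pair_counts[pair] += 1
    return {pair: count / len(sorts) for pair, count in pair_counts.items()}


def similarity(matrix: dict[tuple[str, str], float], a: str, b: str) -> float:
    """Look up the similarity of two cards in either order, defaulting to 0.0."""
    first, second = sorted((a, b))
    return matrix.get((first, second), 0.0)


if __name__ == "__main__":
    matrix = co_occurrence(SORTS)
    # List pairs from most to least often grouped together.
    for (a, b), score in sorted(matrix.items(), key=lambda item: -item[1]):
        print(f"{a} + {b}: {score:.0%}")
```

Pairs with high scores suggest content that users expect to find together, which can inform how you group items in your navigation.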

Tree testing evaluates the findability of information within your site's hierarchy. Participants navigate through a text-based version of your site structure to complete specific tasks, helping you identify navigation problems without visual design influence.

These information architecture methods are crucial for creating intuitive navigation systems and organizing content in ways that match user expectations. For comprehensive guidance on these methods, explore our guides on first click testing, card sorting, and tree testing.

Preference and comparison tests

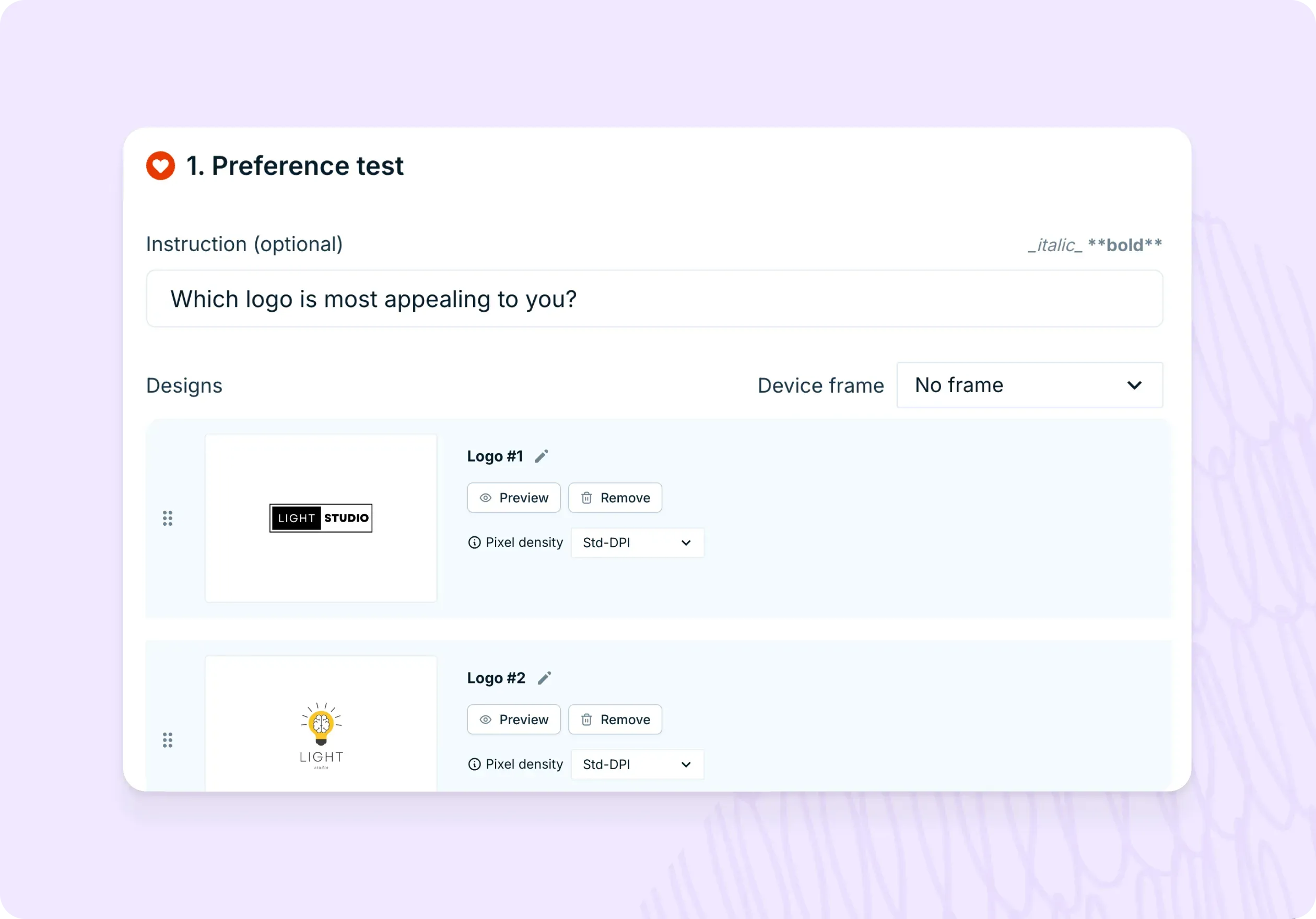

Preference testing helps you make decisions between different design options by gathering user feedback on competing approaches. This method shares similarities with comparative usability testing, where multiple designs are evaluated against the same criteria. Say your company is updating its CRM software subscription plans and you want to test four potential sign-up page options to see which one users prefer. You could set up a preference test in Lyssna with the four sign-up page designs.

This method is particularly valuable when you have multiple viable design directions and need user input to guide your decision-making process. It's important to ask participants to explain why they prefer one design over the others, as this gives you qualitative feedback that can be used to refine the design further.


When to use preference testing:

When choosing between multiple design concepts

Before finalizing visual design elements

To validate design decisions with user feedback

When stakeholders need data to support design choices

For detailed implementation guidance, see our preference testing guide.

Prototype and concept tests

Prototype testing allows you to validate design concepts and user flows before investing in full development. Imagine you work at a media company that's developing a new podcast streaming service. Your team has created a Figma prototype that includes a feature for creating custom playlists. You could import this into Lyssna to test whether users understand how to use the feature and whether it meets their needs.

Testing prototypes is a form of design validation testing: it helps you identify potential problems early and make adjustments before investing more time and resources in building the final product.


When to use prototype testing:

Early in the design process with low-fidelity prototypes

Before development begins on new features

When testing complex user flows or interactions

To validate design concepts with real user behavior

For comprehensive guidance on prototype testing, explore our prototype testing guide.

Guerrilla and quick feedback tests

Guerrilla testing provides rapid, informal feedback by approaching people in public places or online communities and asking them to try out your product or website. You observe the user as they interact with the product, ask them questions about their experience, and take notes on any issues they encounter.

Say you're developing a new mobile app for booking restaurant reservations. You want to see how easily users can navigate through the app, find a restaurant, and make a reservation. You could conduct guerrilla testing by heading to a local coffee shop and asking people if they have a few minutes to test the app prototype. Offering a small incentive, like a gift card or a free coffee, can encourage participation.

When to use guerrilla testing:

When you need quick feedback on early concepts

For testing simple tasks or first impressions

When formal testing isn't feasible due to time or budget constraints

To validate assumptions before investing in more comprehensive research

When should you do usability testing?

The timing of usability testing significantly impacts the value and actionability of your insights. Rather than treating usability testing as a one-time activity, the most successful teams integrate testing throughout their product development lifecycle.

Early concept stage: Use guerrilla testing, five second tests, and preference testing to validate initial ideas and design directions. At this stage, you're primarily concerned with whether users understand your concept and whether it resonates with their needs.

Design and prototyping stage: Implement prototype testing and moderated usability testing to evaluate user flows and interaction patterns. Focus on understanding whether users can complete key tasks and where they encounter friction.

Pre-launch stage: Conduct comprehensive unmoderated testing and first-click testing to identify any remaining usability issues before your product reaches real users. This is your last opportunity to catch problems before they impact user experience.

Post-launch stage: Use ongoing unmoderated testing, analytics review, and user feedback collection to monitor usability performance and identify areas for improvement. This stage focuses on optimization and iteration based on real user behavior.

During major updates: Whenever you're planning significant changes to your product, return to appropriate testing methods to validate your updates before implementation.

The key is matching your testing approach to your current product stage and the decisions you need to make. Early-stage testing should be quick and directional, while later-stage testing can be more comprehensive and detailed.

How often should you do usability testing?

The frequency of usability testing depends on your product development cycle, team resources, and the pace of change in your product. Whatever the cadence, regular and consistent testing generally provides more value than sporadic, comprehensive studies.

| Testing approach | Frequency | Best for | What it looks like | Key benefits |
|---|---|---|---|---|
| Continuous testing | Weekly or every two weeks | Teams with ongoing development cycles, mature products with regular updates | Quick unmoderated tests, guerrilla feedback sessions, regular user interviews integrated into weekly workflows | Catch problems early, stay connected to user needs, build a culture of user-centricity |
| Sprint-based testing | Every 1-2 weeks (aligned with sprint cycles) | Agile teams, product teams using scrum or kanban methodologies | Testing at sprint end to validate completed work or at sprint start to inform upcoming development | Seamless integration with existing workflows, timely feedback for iterative development |
| Feature-based testing | As needed around releases | Teams with less predictable development cycles, resource-constrained teams, product teams working on major feature launches | Comprehensive testing before and after significant feature releases or product updates | Focused insights when they matter most, efficient use of limited research resources |
| Quarterly comprehensive reviews | Every 3 months | All teams (as a complement to other approaches) | Broader evaluation of overall user experience, benchmarking performance, identifying systemic issues | Big-picture perspective, track improvement over time, catch issues that emerge slowly |

The most important factor: Consistency matters more than frequency. Regular testing, even if less comprehensive, typically provides more value than infrequent but extensive testing sessions.

How to choose the right method

Selecting the appropriate usability testing method requires considering multiple factors that influence both the quality of insights you'll gather and the practical feasibility of conducting the research.

Research goals

The specific questions you're trying to answer should be your primary guide for method selection.

Testing first impressions: If you want to understand users' immediate reactions to your design, five second testing and preference testing are most effective. These methods capture gut reactions before users have time to rationalize their responses.

Evaluating task success: When you need to understand whether users can complete specific actions, moderated task-based testing and unmoderated usability testing provide the most comprehensive insights. These methods allow you to observe the complete user journey and identify specific friction points.

Assessing navigation and findability: For questions about information architecture and site organization, first-click testing, card sorting, and tree testing offer specialized insights that other methods can't provide.

Comparing design options: When choosing between multiple design directions, preference testing and A/B testing help you make data-driven decisions based on user feedback.

Understanding user motivations: For deeper insights into why users behave in certain ways, moderated testing methods provide the richest qualitative data through real-time questioning and observation.

Product stage

Your product's development stage significantly influences which testing methods will provide the most valuable insights.

Early stage (idea and concept): Focus on guerrilla testing, prototype testing, and five second testing. These methods help validate core concepts and design directions without requiring fully developed products.

Mid-stage (design and development): Use moderated task-based testing, first click testing, and preference testing to refine user flows and make design decisions. This stage benefits from more comprehensive testing that can guide development priorities.

Post-launch (optimization and iteration): Implement remote unmoderated testing, analytics review, and A/B testing to optimize existing features and identify improvement opportunities. This stage emphasizes scalable testing methods that can provide ongoing insights.

Resources (budget, time, people)

Your available resources significantly impact which testing methods are feasible for your team.

Low budget and time constraints: Unmoderated testing, guerrilla testing, and analytics review provide valuable insights with minimal resource investment. These methods can often be implemented within days and provide actionable feedback quickly.

Moderate resources: Moderated remote testing and comprehensive unmoderated studies offer deeper insights while remaining cost-effective. These approaches balance insight quality with resource efficiency.

Larger budgets: Lab-based moderated sessions, comprehensive user research studies, and longitudinal testing programs provide the richest insights but require significant time and financial investment.

Remember that the most expensive method isn't necessarily the most valuable. Often, quick and simple testing methods provide sufficient insights to make informed decisions, especially in fast-moving development environments.

Audience and accessibility

Your target audience characteristics and accessibility requirements also influence method selection.

Global reach requirements: Remote testing methods enable you to reach participants across different geographic locations and time zones, providing insights from diverse user groups.

Accessibility considerations: In-person testing often provides better opportunities for detailed observation of how users with disabilities interact with your product, allowing you to identify accessibility barriers that might not be apparent in remote testing.

Specific user groups: Some audiences are easier to reach through certain testing methods. For example, busy professionals might be more willing to participate in quick unmoderated tests than lengthy moderated sessions.

Technical comfort levels: Consider your audience's comfort with technology when selecting testing methods. Some users may struggle with remote testing tools, while others prefer the convenience of testing from their own environment.

Best practices for choosing a usability testing method

Successful usability testing programs follow several key principles that maximize the value and impact of research efforts.

Start simple, iterate often: Begin with straightforward testing methods that provide quick insights, then gradually incorporate more complex approaches as your research program matures. This approach helps you build testing habits and demonstrate value before investing in more resource-intensive methods.

Combine qualitative and quantitative methods: Use quantitative methods to identify what's happening in user behavior, then apply qualitative methods to understand why it's happening. This combination provides both the statistical confidence to make decisions and the contextual understanding to make the right decisions.

Test early, test frequently: Conduct testing throughout your development process rather than waiting until the end. Early testing catches problems when they're easier and less expensive to fix, while frequent testing ensures you stay connected to user needs as your product evolves.

Align usability testing with KPIs: Connect your testing methods to business metrics that matter to your organization. This alignment helps demonstrate the value of usability testing and ensures your research contributes to meaningful business outcomes.

Document and share insights: Establish processes for capturing, organizing, and sharing insights from your usability testing. The most valuable research is research that actually influences product decisions, which requires effective communication and documentation practices.

Build testing into your workflow: Rather than treating usability testing as an ad-hoc activity, integrate it into your regular product development processes. Creating a structured usability test plan ensures testing happens consistently and provides ongoing value to your team.


Ready to gather insights that matter?

Choose your testing method and start collecting user feedback with Lyssna's free plan – no credit card required.